Digital

Our approach to testing

January 8, 2016 by craigmilligan | Category: Digital Public Services, mygov.scot

This is a post by Rajesh Yarlagadda and David Vidal, our test engineers.

This is the third post in our series on how we approach continuous delivery, and it covers our approach to testing.

Testing is an essential part of every phase of the mygov.scot development process, with quality “baked in” to the product at every stage. This post gives an overview of how we approach testing and some of the tools we use. Feel free to get in touch if you’d like to know more about a particular technology or our reasons for using it.

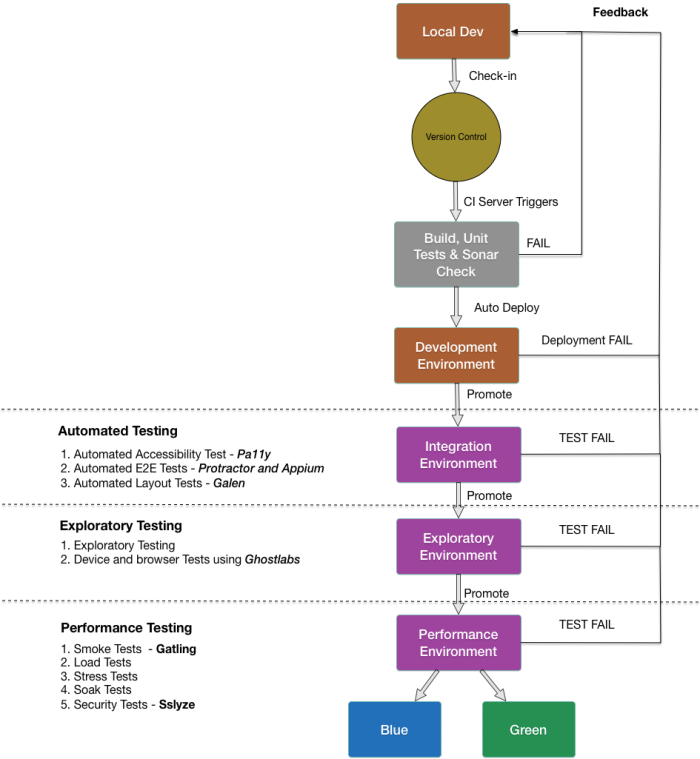

After creating a new feature to meet a particular user need, our developers submit changes to our version control system – this can include source code, environment modifications and configuration files.

After each commit to the version control system, the continuous integration (CI) server will:

- Trigger a build

- Run automated unit tests

- Run a Sonar code quality check

- Automatically deploy the successful build to a development environment
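The commit stage above is essentially a fail-fast sequence: each step runs only if the previous one succeeded. The Python sketch below illustrates that ordering under stated assumptions. It is not the team’s actual CI configuration, and the stage functions (`build`, `run_unit_tests`, `sonar_check`, `deploy_to_dev`) are hypothetical placeholders for calls to the real build tool, test runner, Sonar server and deployment job.

```python
from typing import Callable, List, Tuple

# A stage is a (name, function) pair; the function takes a commit id and
# returns True on success. All stage bodies below are hypothetical stand-ins.
Stage = Tuple[str, Callable[[str], bool]]


def build(commit: str) -> bool:
    return True  # placeholder: compile and package the commit


def run_unit_tests(commit: str) -> bool:
    return True  # placeholder: invoke the unit test runner


def sonar_check(commit: str) -> bool:
    return True  # placeholder: ask the Sonar server whether the quality gate passed


def deploy_to_dev(commit: str) -> bool:
    return True  # placeholder: trigger the deployment job for the dev environment


def run_commit_stage(commit: str, stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that succeeded, so a build only reaches
    the development environment if every earlier stage passed.
    """
    completed: List[str] = []
    for name, stage in stages:
        if not stage(commit):
            print(f"{commit}: stage '{name}' failed, stopping pipeline")
            break
        completed.append(name)
    return completed


PIPELINE: List[Stage] = [
    ("build", build),
    ("unit tests", run_unit_tests),
    ("sonar quality check", sonar_check),
    ("deploy to dev", deploy_to_dev),
]
```

With these placeholder stages every step succeeds; swapping any stage for one that returns `False` stops the pipeline at that point, so nothing is deployed to the development environment.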

The test engineers manually promote the successful build to the integration test environment using an automated deployment job. The integration test environment is designed to run all of our automated tests: accessibility tests, end-to-end (E2E) tests and browser layout tests. If a feature change passes all automated tests with no failures, it is promoted to the exploratory test environment.

The exploratory test environment is used for session-based exploratory testing and for browser testing on multiple devices using the ‘Ghostlab’ software. Once exploratory testing has completed successfully, feature changes are promoted to the performance test environment.
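The promotion rules described so far can be modelled as a small state machine in which a build moves one environment forward only when its gate passes. This is a hypothetical Python sketch: the `Env` names, the `promote` function and the shape of the automated-test results are illustrative assumptions, not part of the mygov.scot tooling.

```python
from enum import Enum
from typing import Dict, Optional


class Env(Enum):
    DEV = "development"
    INTEGRATION = "integration test"
    EXPLORATORY = "exploratory test"
    PERFORMANCE = "performance test"


# Next environment for each stage; None means the next step is the stakeholder demo.
NEXT_ENV: Dict[Env, Optional[Env]] = {
    Env.DEV: Env.INTEGRATION,          # gate: a test engineer triggers the deployment job
    Env.INTEGRATION: Env.EXPLORATORY,  # gate: every automated test passed
    Env.EXPLORATORY: Env.PERFORMANCE,  # gate: exploratory testing completed successfully
    Env.PERFORMANCE: None,
}


def all_automated_tests_passed(results: Dict[str, bool]) -> bool:
    """Integration gate: true only if at least one suite ran and none failed.

    `results` is a hypothetical map such as
    {"accessibility": True, "e2e": True, "layout": True}.
    """
    return bool(results) and all(results.values())


def promote(current: Env, gate_passed: bool) -> Env:
    """Move one environment forward if the gate passed; otherwise stay put."""
    nxt = NEXT_ENV[current]
    if gate_passed and nxt is not None:
        return nxt
    return current
```

A single failing layout test keeps the build in the integration environment, which mirrors the “no failures” rule above.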

The performance test environment is designed to run performance tests (smoke, load, stress and soak tests) as well as security tests. Once testing is complete, the feature changes are demonstrated to the product owner and other stakeholders.

- Smoke – check that there has been no significant drop in performance between builds

- Load – check that the site can handle an acceptable number of users (currently set to 1,000)

- Stress – check that the site either copes with, or fails gracefully under, a much larger number of users (currently set to 5,000)

- Soak – check that there are no memory leaks over a sustained period (currently set to one day)
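What counts as a “significant drop in performance” is not defined above, so the smoke check below is a sketch under two assumptions: builds are compared on their 95th-percentile response time, and a slowdown of more than 10% (the hypothetical `MAX_SLOWDOWN`) fails the check. The response-time samples would come from whatever load-testing tool drives the performance environment.

```python
import statistics
from typing import Sequence

# Assumption: the threshold for a "significant" drop is illustrative only.
MAX_SLOWDOWN = 0.10


def p95(samples_ms: Sequence[float]) -> float:
    """95th-percentile response time in milliseconds (requires Python 3.8+)."""
    # quantiles(..., n=20) returns 19 cut points; the last is the 95th percentile.
    return statistics.quantiles(samples_ms, n=20)[-1]


def smoke_check(baseline_ms: Sequence[float], candidate_ms: Sequence[float],
                max_slowdown: float = MAX_SLOWDOWN) -> bool:
    """Pass if the candidate build's p95 is no more than `max_slowdown` worse
    than the previous build's p95."""
    return p95(candidate_ms) <= p95(baseline_ms) * (1 + max_slowdown)
```

Comparing a percentile rather than the mean keeps a handful of very slow requests from being averaged away, which is usually what a “drop in performance” check cares about.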

After the demo, testers record the component versions and save them as the ‘last known good version’; the relevant artefacts are then pushed to the build repository server.
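A ‘last known good version’ is essentially a manifest of component versions. The sketch below shows one possible shape for it as JSON; the field names, the component names and the `build_manifest` / `components_from_manifest` helpers are hypothetical, chosen only to illustrate the idea.

```python
import json
from datetime import date
from typing import Dict


def build_manifest(versions: Dict[str, str], demo_date: date) -> str:
    """Serialise the component versions approved at the demo as JSON.

    `versions` maps hypothetical component names to version strings.
    Sorting the keys makes manifests easy to diff from week to week.
    """
    manifest = {
        "label": "last known good version",
        "approved_on": demo_date.isoformat(),
        "components": versions,
    }
    return json.dumps(manifest, indent=2, sort_keys=True)


def components_from_manifest(text: str) -> Dict[str, str]:
    """Read the component versions back, e.g. when building an environment."""
    return json.loads(text)["components"]
```

Because the manifest pins exact versions, any environment built from it can be traced back to what was demonstrated to stakeholders.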

Every Monday, the mygov.scot team creates either a blue or a green offline production environment (whichever is not currently live) from the ‘last known good version’. Smoke testing is carried out on the offline environment before it is switched over to become the live production version.
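The Monday blue/green switch can be illustrated with a minimal model in which one colour is live while the other is rebuilt, smoke tested and only then made live. This Python sketch is hypothetical: `BlueGreen`, `weekly_release` and the `smoke_test` callback stand in for real provisioning, testing and routing steps.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class BlueGreen:
    """Minimal model of a blue/green pair: one colour is live, the other offline."""
    live: str = "blue"
    deployed: Dict[str, str] = field(default_factory=dict)  # colour -> release label

    @property
    def offline(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def weekly_release(self, release: str, smoke_test: Callable[[str], bool]) -> bool:
        """Build the offline colour from `release`, smoke test it, then switch.

        If the smoke test fails, the live environment is left untouched.
        """
        target = self.offline
        self.deployed[target] = release  # stand-in for provisioning the environment
        if not smoke_test(target):
            return False
        self.live = target  # stand-in for repointing traffic to the new environment
        return True
```

If the smoke test fails, `live` still points at the previous environment, which is what makes the weekly switch low-risk.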

Types of test

We perform many different types of test, and the starting point is the user story, written for each new feature before work begins. Before a feature is even validated against the user acceptance criteria, we validate it against system quality attributes: performance, accessibility and security. Once these tests are complete, the next stage is exploratory testing to validate the story.

We look to ensure that we have:

- Complete functionality on a range of different browsers. This includes Internet Explorer (versions 8 to 10 running on Windows 7), Chrome (running on Ubuntu), Safari (on Mac), native browsers on iOS devices (versions 6 to 9) and native browsers on Android (versions 2.2 to 5.1).

- HTML code in line with WCAG 2.0 AA accessibility standards, tested via the open source tool Pa11y

- A good level of readability for screen reader users, tested via the ChromeVox screen reader

- A secure site, through automated security tests performed by OWASP ZAP, which scans the site and validates it against the OWASP Top 10 web application security risks

- Consistent visual standards and UX design, tested with Galen Framework layout tests, which capture a screenshot of the page when elements are not where they should be.

Improvements

Testing takes time: performance tests run in the background and can take up to a day each, and manual tests take several hours a day. Every new test we add raises the quality of our work, and every test we automate or move to an earlier stage in the process lets us spot and correct issues sooner. We’re working to include performance testing in our continuous integration server process, which will let us spot code that could slow the site down and correct it much earlier.

Security testing currently relies more on manual processes, and we are working to automate more of it. We are also looking to expand the range of devices and browsers we test on; we already cover a good number and want to add more.

Follow the team via @mygovscot on Twitter for more updates. Want to comment? Let us know below!

Tags: Technology & Digital Architecture

Hi, this is really useful. Could I ask whether Scottish Digital have a bank of user testers that can be called upon to do end to end testing of products? Many thanks, Craig

Hi. Thanks for your question.

Not as such. User research for our projects is planned around the user profile that testing requires, to ensure participants have relevant or recent experience to draw on. We use a recruiter for the majority of our testing, who recruits to a profile we outline.

Reply sent by Digital Directorate comms team.